Virtual Objects You Can Touch

Thu 26 August 2021 by Jim Purbrick

Now that Horizon Workrooms has launched, I’m very happy to be able to write about the functionality that I found most exciting while building the experience: the mapping of virtual objects to their real world counterparts.

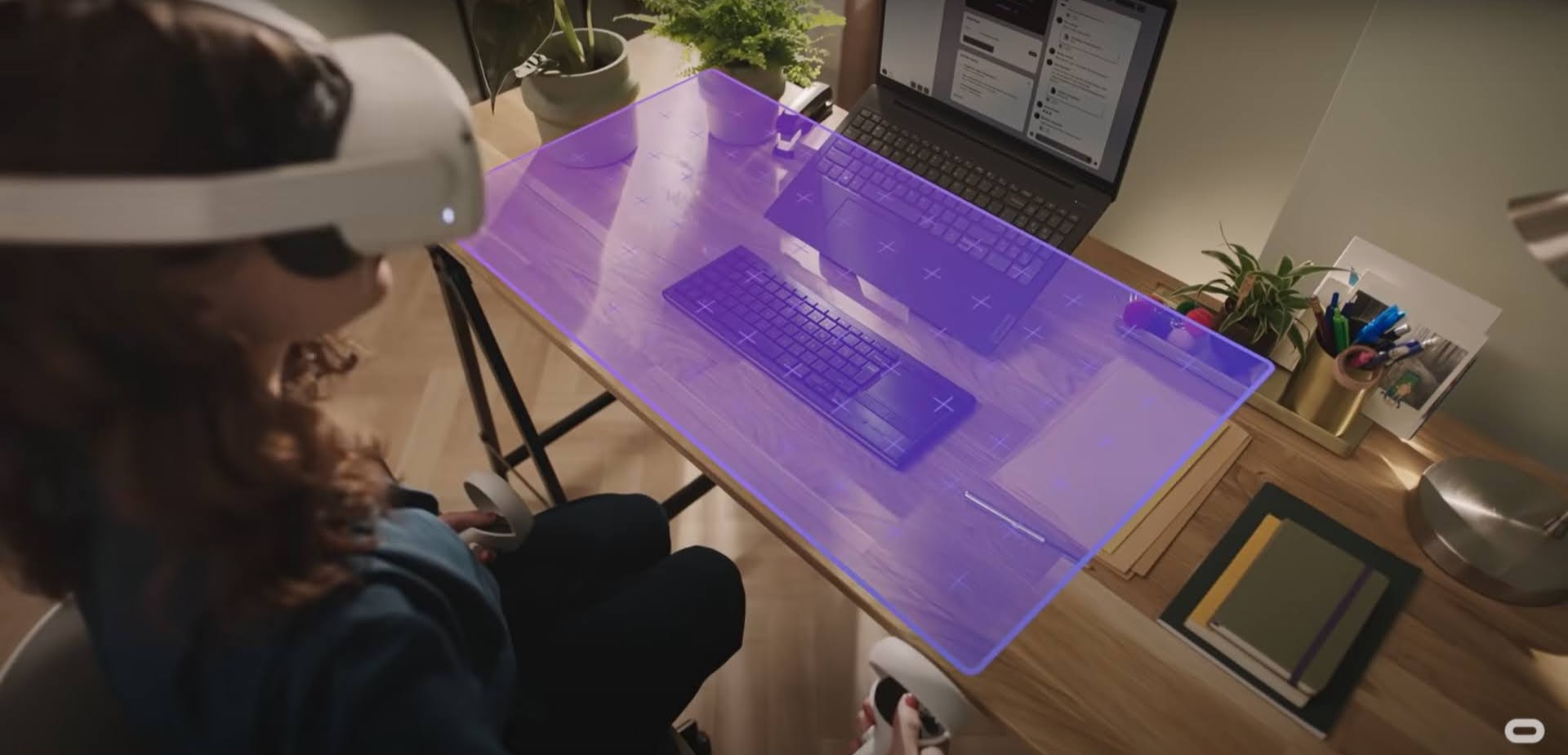

Augmented and mixed reality experiences typically overlay real world objects with virtual annotations. An AR app on your phone might, for example, recognize a face seen by the camera and show it on the phone screen with added bunny ears. Workrooms is different: it shows you an entirely virtual environment, but asks you to indicate the position of real world objects like your desk in a process that feels like a more detailed guardian setup. Workrooms then positions your avatar in the virtual world so that your real desktop aligns with your virtual desktop. When you reach out and touch the virtual desk, your hands touch the real desk. As long as the important virtual objects within arm’s reach are mapped to real objects, the virtual environment can be much bigger than the available real space while maintaining the illusion that every virtual object can be touched as well as seen.
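To make the alignment step concrete, here is a minimal sketch of how a real desk pose could be lined up with a virtual one on the floor plane. It is not how Workrooms does it: the `Pose2D` type and the `alignment` and `to_virtual` functions are hypothetical names invented for illustration, and the sketch assumes the desk can be described by a 2D position plus a heading.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose2D:
    """Position on the floor plane (metres) and heading (radians)."""
    x: float
    z: float
    yaw: float


def alignment(real_desk: Pose2D, virtual_desk: Pose2D) -> Pose2D:
    """Rigid transform (rotation + translation) that carries tracking-space
    coordinates into the virtual world so the real desk lands on the
    virtual desk. Hypothetical helper, not a Workrooms API."""
    dyaw = virtual_desk.yaw - real_desk.yaw
    c, s = math.cos(dyaw), math.sin(dyaw)
    # Rotate the real desk position, then translate it onto the virtual one.
    tx = virtual_desk.x - (c * real_desk.x - s * real_desk.z)
    tz = virtual_desk.z - (s * real_desk.x + c * real_desk.z)
    return Pose2D(tx, tz, dyaw)


def to_virtual(t: Pose2D, x: float, z: float) -> Tuple[float, float]:
    """Apply the alignment transform to a tracked point such as a hand."""
    c, s = math.cos(t.yaw), math.sin(t.yaw)
    return (c * x - s * z + t.x, s * x + c * z + t.z)
```

Because the transform is rigid, distances are preserved: a hand that is 30 cm from the real desk edge is also 30 cm from the virtual desk edge, which is what makes the touch line up with what you see.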

In addition to adding to the immersion and realism of the experience, the haptic feedback has important practical benefits. Typing on a virtual keyboard is much easier if you’re also typing on a real keyboard rather than on a flat featureless surface or just moving your fingers in space. It is much less tiring to draw on a virtual whiteboard if you are also leaning on a real wall. And it’s much less dangerous to show someone a virtual desk if leaning on it or putting a coffee on it doesn’t result in you falling over or spilling coffee on your feet. While requiring a suite of real objects to be available puts some additional constraints on using the application, the required objects are common enough in the case of Workrooms that the trade-off is often worthwhile, and it is less onerous than requiring specialised additional VR hardware that provides much lower fidelity haptic feedback.

Mapping real objects to virtual objects can also go beyond providing haptic feedback: it can provide a very natural indication of intent. Moving from the desk where you are seated to a nearby wall in the real world can indicate to an application the intent to use a whiteboard, and might result in teleporting in the virtual world to a whiteboard location much further away, allowing people to navigate virtual spaces which are much larger than the available space in the real world.
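One way to picture proximity as a signal of intent is the sketch below. Everything in it is an assumption made for illustration: the `Anchor` type, the `intended_anchor` function and the 0.75 m radius are hypothetical and are not taken from Workrooms.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Anchor:
    """A mapped real object and the virtual spot it stands in for."""
    name: str
    real_xz: Tuple[float, float]      # real object position in tracking space
    virtual_xz: Tuple[float, float]   # where the user should appear in VR


def intended_anchor(
    head_xz: Tuple[float, float],
    anchors: Sequence[Anchor],
    radius: float = 0.75,             # assumed threshold in metres
) -> Optional[Anchor]:
    """Return the nearest anchor within `radius` of the headset, or None
    if the user is not close enough to any mapped object."""
    best = None
    best_distance = radius
    for anchor in anchors:
        d = math.hypot(head_xz[0] - anchor.real_xz[0],
                       head_xz[1] - anchor.real_xz[1])
        if d <= best_distance:
            best, best_distance = anchor, d
    return best
```

An application could poll this each frame and, when the result changes from the desk anchor to the wall anchor, teleport the avatar to that anchor's `virtual_xz`, however far away the virtual whiteboard happens to be.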

Using real objects that can be touched shifts the problem of providing haptic feedback from one requiring complex mechanical force feedback devices to one that, in future, would use computer vision to recognise objects in the real environment using the same cameras that provide inside-out tracking capabilities in modern VR headsets. Because the virtual objects map exactly to their real counterparts, the haptic feedback provided would be perfect. A real MacBook keyboard feels exactly like a MacBook keyboard when you touch it because it is a MacBook keyboard, whereas any force feedback device trying to synthesize the same haptic feedback could only ever provide an approximation.

While the relative ubiquity of home offices means that this mapping approach lends itself to an experience like Workrooms, it’s also exciting to think about how similar approaches could be used to add perfect haptic feedback to less mundane VR experiences in more fantastic virtual environments. The Workrooms team was lucky enough to see some of those possibilities during a team offsite to the Star Wars VR experience at the Void in London. The experience created the illusion of a large virtual environment within a much smaller real space by using tricks like having participants move through a real doorway into a virtual elevator and then later exit through the same doorway into a new virtual room once the elevator had virtually moved to a new floor in the virtual environment.

With the increasing availability of headsets which use SLAM-based inside-out tracking and the development of techniques like redirected walking, it’s possible to imagine a future where, rather than defining a safe cuboid within the real world, guardian systems could instead map an entire home or office, identifying doors, chairs, tables and other objects which could be enumerated via an API to VR applications. The application could then use the available real world features to generate huge custom fantasy environments in a roguelike game in which every wall and table can be leant on, every door can be opened and closed, every chair sat in and potentially every staircase climbed. I’m very excited to see, hear and touch the experiences these techniques might enable in the near future.
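No such API exists as far as this post is concerned, so the following is purely a speculative sketch of what an application might do with an enumerated list of real objects. The `RealObject` and `VirtualProp` types, the `THEME` table and the `dress_room` function are all hypothetical names invented for illustration.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class RealObject:
    """One object as a hypothetical guardian API might report it."""
    kind: str                              # e.g. "door", "chair", "table"
    position: Tuple[float, float, float]   # metres, tracking space


@dataclass(frozen=True)
class VirtualProp:
    name: str
    position: Tuple[float, float, float]


# Assumed fantasy theme: each real object kind can become one of several props.
THEME: Dict[str, List[str]] = {
    "door": ["dungeon gate", "portcullis"],
    "chair": ["throne", "barrel"],
    "table": ["altar", "alchemy bench"],
}


def dress_room(objects: Sequence[RealObject], seed: int) -> List[VirtualProp]:
    """Give every recognised real object a themed virtual twin at the same
    position, so whatever the player sees there can also be touched."""
    rng = random.Random(seed)  # seeded so a given level is reproducible
    props = []
    for obj in objects:
        choices = THEME.get(obj.kind)
        if choices is None:
            continue  # unknown kinds get no twin in this sketch
        props.append(VirtualProp(rng.choice(choices), obj.position))
    return props
```

The design choice worth noting is that the generator only picks what each object looks like, never where it is: positions come straight from the real world, which is what would keep the haptics exact. A real application would also have to decide how to keep players safely away from the real objects it does not recognise, which this sketch simply skips.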

A Past And Present Future Of Work

Over the last few years I’ve spent a lot of time helping people new to virtual worlds learn how they work. Over the last few weeks I’ve been sharing a series of short posts on some of the high level concepts I covered which will hopefully be useful …

Small Places Loosely Joined

Over the last few years I’ve spent a lot of time helping people new to virtual worlds learn how they work. Over the next few weeks I’m sharing a series of short posts on some of the high level concepts I covered which will hopefully be useful to …

A Tall Dark Stranger

Over the past few years I’ve spent a lot of time helping people new to virtual worlds understand how they work. Over the next few weeks I’m going to share a series of short posts on some of the high level concepts I covered which will hopefully be …

The Conversation Around Content

Over the last few years I’ve spent a lot of time helping people new to virtual worlds learn how they work. Over the next few weeks I’m going to share a series of short posts on some of the high level concepts I covered which will hopefully be …

Building Safety in to Social VR

Last year I hosted a panel on creating a safe environment for people in VR with Tony Sheng and Darshan Shankar at OC3. I commented at the time that the discussion reminded me of the story of LambdaMOO becoming a self-governing community told by Julian Dibbell in My Tiny Life …

Creating A Safe Environment For People In VR

Strange Tales From Other Worlds

At the end of last year, Michael Brunton-Spall and Jon Topper asked me if I would like to give the opening keynote at Scale Summit as I had “lots of experience scaling weird things”, by which they meant Second Life and EVE Online. I immediately thought of The Corn Field …

Another Age Must Be The Judge

Almost exactly 6 years ago, the incredible Cory Ondrejka and I met for the first time in real life (having previously blogged together on Terra Nova) at the Austin Game Conference 2004, where we got on like a house on fire. Several months later I joined Linden Lab and (as …

Lang.NET 3 Years On

It was incredibly satisfying to be able to go back to Lang.NET 3 years on to be able to say that we actually managed to make all the crazy plans we had for Mono in 2006 work. My talk is now online here. Lots of people hadn’t seen …

Babbage Linden In Real Life

When I heard that the theme for the Linden Lab Christmas party was going to be steam punk, I knew I had to go as Babbage Linden. Since 2005 my avatar in Second Life has sported a victorian suit from Neverland and a steam arm, originally from a Steambot avatar …

The Creation Engine No. 2
