BL:AM

Sat 07 October 2023 by Jim Purbrick

After The Spirit of Gravity in February I ended up talking to Jason Hotchkiss and Jo Summers. I knew Jason from a Build Brighton guitar pedal workshop years ago and as a sound artist from Sound Plotting, but Jo knew Jason as the game developer who made 1D Pong. After talking about 1D Pong and another 1D dungeon crawler we started thinking about the most ridiculous 1D game we could imagine in 2023 and settled on 1D, 1 button battle royale.

Normally that’s where the story would end, but for some reason the idea kept rattling around in my head and eventually I ended up putting my thoughts down in a Google Doc and started thinking about how to make it happen.

To be a battle royale the game had to be multiplayer with players on a shared map trying to shoot each other, but a 1D display meant the gameplay couldn’t come from aiming. Judging the distance to shoot by charging and launching a missile solved this problem and provided a fun core gameplay loop which could be implemented with a single button. Hold the button to charge the missile then release the button to fire a distance dependent on the time the button was held.
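As a rough illustration of the charge-and-release idea, here is a minimal sketch in C++. The constants `kCellsPerSecond` and `kMaxRange` and the function names are hypothetical and are not taken from the game's code; the only thing the sketch assumes from the design is that a longer hold gives a longer shot.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical tuning values, chosen only for illustration.
constexpr int kCellsPerSecond = 20;  // range gained per second of holding
constexpr int kMaxRange = 60;        // cap so a very long hold stops growing

// Convert how long the button was held (milliseconds) into a missile
// range in display cells. A quick tap still fires one cell.
int missileRange(int heldMs) {
    assert(heldMs >= 0);
    int range = heldMs * kCellsPerSecond / 1000;
    return std::min(std::max(range, 1), kMaxRange);
}

// Cell the missile lands on: shooter position plus range in the firing
// direction (+1 or -1), kept inside the map [0, mapLength).
int landingCell(int shooter, int direction, int heldMs, int mapLength) {
    assert(direction == 1 || direction == -1);
    int target = shooter + direction * missileRange(heldMs);
    return std::min(std::max(target, 0), mapLength - 1);
}
```

With these made-up numbers, holding the button for half a second from cell 10 would land the missile on cell 20, while a tap only reaches the neighbouring cell.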

With the button used for shooting, moving players around had to rely on another key feature of battle royale games: the shrinking map. The game would start with a large map filling the display and would position players randomly as they joined. Periodically the map would shrink and randomize the player positions within the smaller play area. This would create a natural increase in tempo: as players moved closer together they could fire shorter distances and therefore fire more often. Eventually all the players would be next to each other, so just tapping the button would always result in hitting a player, ensuring the game came to a swift end.
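The shrink-and-scatter step could be sketched along these lines. The `Arena` struct, the three-quarters shrink factor and the rule that players never share a cell are all assumptions made for the example, not details from the game.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <random>
#include <vector>

struct Arena {
    int start;   // first playable cell on the display
    int length;  // number of playable cells
};

// Shrink the play area to a hypothetical 3/4 of its length, keeping it
// centred and never smaller than one cell per player.
Arena shrink(Arena arena, int playerCount) {
    assert(playerCount > 0 && arena.length >= playerCount);
    int newLength = std::max(arena.length * 3 / 4, playerCount);
    int newStart = arena.start + (arena.length - newLength) / 2;
    return Arena{newStart, newLength};
}

// Pick a distinct random cell inside the arena for each player by
// shuffling the list of playable cells and taking the first few.
std::vector<int> scatterPlayers(const Arena& arena, int playerCount,
                                std::mt19937& rng) {
    assert(playerCount <= arena.length);
    std::vector<int> cells(arena.length);
    std::iota(cells.begin(), cells.end(), arena.start);
    std::shuffle(cells.begin(), cells.end(), rng);
    cells.resize(playerCount);
    return cells;
}
```

Calling `shrink` repeatedly with four players on a 60 cell map gives lengths of 45, 33, 24 and so on, until the arena is exactly four cells long and every tap hits a neighbour.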

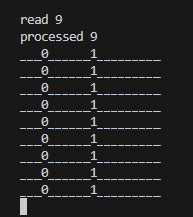

Jason had already suggested the Raspberry Pi Pico as a potential hardware platform, so I built a prototype as a Linux terminal application which outputs frames as lines of ASCII to quickly test the design in a way that might be easy to port. The first prototypes were surprisingly fun. Some tweaking of the map resize period gave good players enough time to range-find long distances while not making weaker players wait too long if they were a long way from the action. As the map got smaller the action got more frantic before ending in a flurry of button mashing as planned.

The slow pace at the start gave players enough time to learn how to play, but could feel frustrating when all the targets were at the other end of the map. A solution came by adding loot boxes, another feature common to battle royale games. Scattering loot boxes across the map meant players would always have something nearby to shoot, helped maps feel more varied and provided a way to add depth via power-ups and another way to move around via teleporters.

Loot boxes also added another interesting gameplay choice: should I try to snipe an opponent immediately or get tooled up first? The initial design always fired missiles at the nearest target, which Jo highlighted as a problem as it meant you couldn’t always pick your target. An easy solution was to alternate the direction of fire. Just tapping the button meant players could quickly launch a missile in the wrong direction before getting back to carefully charging a shot to fire in the right direction again.
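Alternating the direction of fire needs very little state. The sketch below assumes the direction flips after every shot; the `Shooter` struct and its members are hypothetical names used for illustration.

```cpp
#include <cassert>

struct Shooter {
    int position = 0;
    int direction = 1;  // +1 fires towards higher cells, -1 towards lower

    // Fire a missile with the given range and return the cell it lands on.
    // The direction flips after every shot, so a quick tap "spends" a shot
    // in the unwanted direction to line up the next one.
    int fire(int range) {
        assert(range > 0);
        int landing = position + direction * range;
        direction = -direction;
        return landing;
    }
};
```

A player at cell 10 who wants to hit a target at cell 5 while facing the wrong way would tap once (landing on 11) and then charge a five cell shot, which now travels in the other direction.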

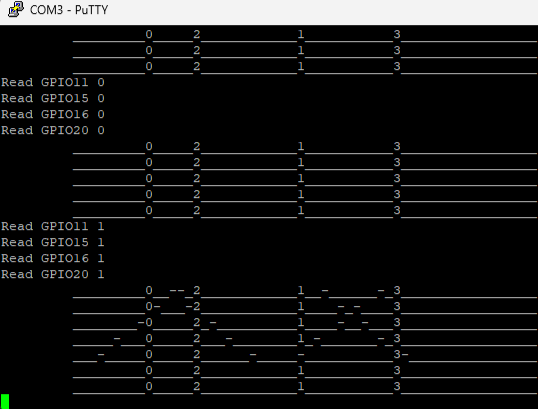

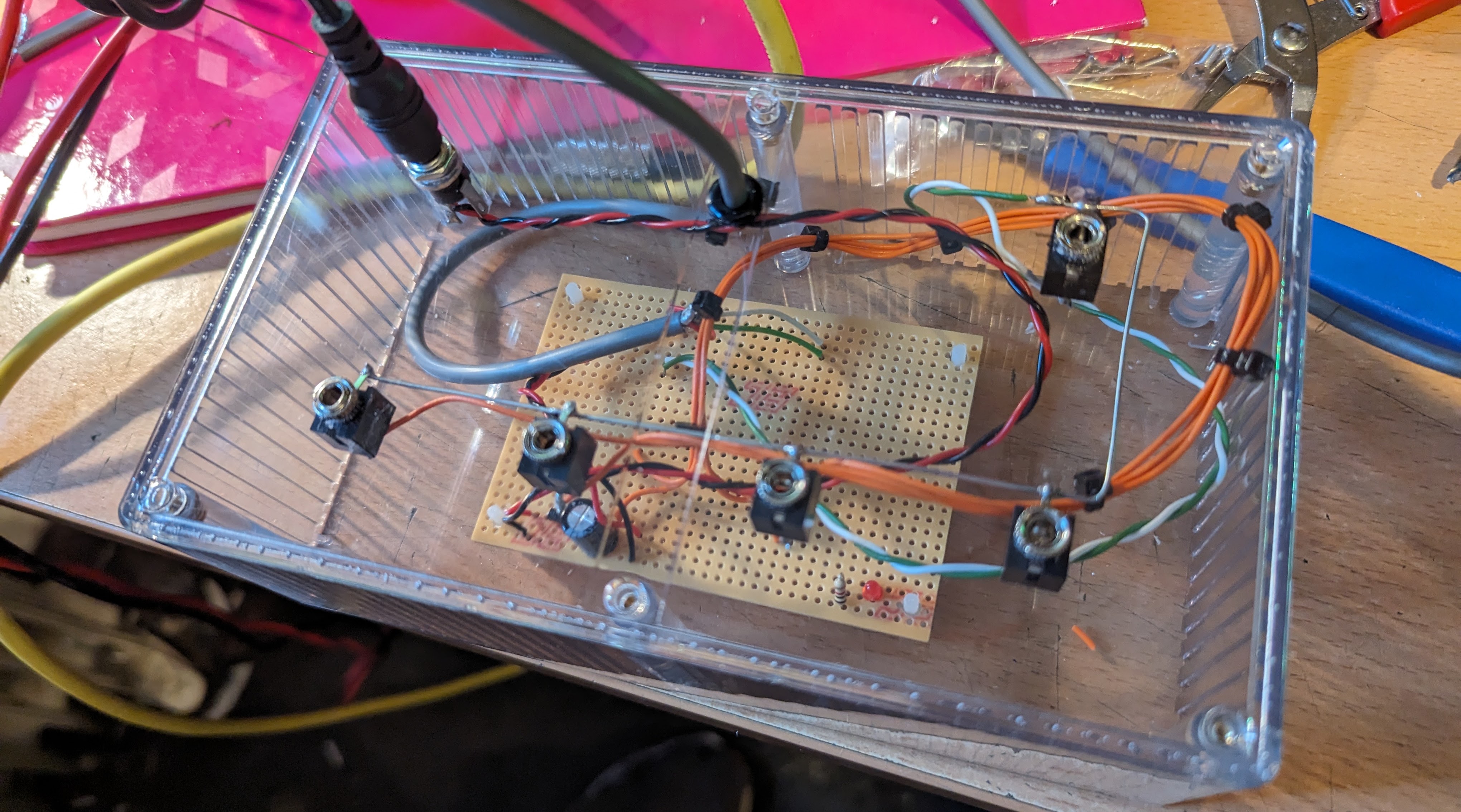

After playtesting the software version at The Brighton Indie Gamedev social, I was convinced that the game was fun and started thinking about hardware. I expected it to be a lot of work to get running on the Pico, especially as I’d allowed myself to joyride some relatively new C++ features like lambdas and auto parameter types, but g++ happily took my fancy code and cross compiled it without any complaints. I could also use PuTTY to connect to the Pico as a USB serial device to see the ASCII output, which was incredibly useful while testing the hardware without the LED display and then for debugging problems while getting the display working.

I ended up with a hardware main function (which wired the inputs up to the Pico pins and redirected the output to the USB serial interface), a terminal main function (which used some hacks to get unbuffered keyboard presses) and renderTerminal and renderRgb methods implemented by the game objects. The rest of the code was shared between the two builds, allowing me to quickly develop, test and debug the game using VS Code and gdb, then cross compile and drag a binary to the Pico mounted as a USB drive.
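To give a flavour of how game objects might draw themselves to both outputs, here is a small sketch. Only the method names `renderTerminal` and `renderRgb` come from the description above; the signatures, the `Rgb` and `World` types, the characters and the colours are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

struct Rgb { std::uint8_t r, g, b; };

struct Player {
    std::size_t position;
    void renderTerminal(std::string& line) const {
        assert(position < line.size());
        line[position] = 'P';
    }
    void renderRgb(std::vector<Rgb>& strip) const {
        assert(position < strip.size());
        strip[position] = Rgb{0, 255, 0};  // green pixel
    }
};

struct Missile {
    std::size_t position;
    void renderTerminal(std::string& line) const {
        assert(position < line.size());
        line[position] = '*';
    }
    void renderRgb(std::vector<Rgb>& strip) const {
        assert(position < strip.size());
        strip[position] = Rgb{255, 0, 0};  // red pixel
    }
};

// Shared between builds: only the final output step differs.
struct World {
    std::size_t length;
    std::vector<Player> players;
    std::vector<Missile> missiles;

    std::string renderTerminal() const {  // one ASCII line per frame
        std::string line(length, '.');
        for (const auto& m : missiles) m.renderTerminal(line);
        for (const auto& p : players) p.renderTerminal(line);
        return line;
    }
    std::vector<Rgb> renderRgb() const {  // one colour per LED
        std::vector<Rgb> strip(length, Rgb{0, 0, 0});
        for (const auto& m : missiles) m.renderRgb(strip);
        for (const auto& p : players) p.renderRgb(strip);
        return strip;
    }
};
```

In this arrangement a terminal `main` would print the string from `renderTerminal` each frame, while a hardware `main` would push the result of `renderRgb` to the LED strip; everything else stays common to both builds.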

In May I got the chance to play 1D Pong at Jason’s studio which made me realize how important audio is when you only have a 1D display: the sounds of balls bouncing off bats and points being scored did a lot to explain what was going on, so I started thinking about adding sound to 1D battle royale. The Pico doesn’t have a digital to analogue converter, but some experimentation showed that it can generate some gloriously grungy sounds by using pulse width modulation to play samples using one of the digital outputs. After aggressively compressing some of ProjectsU012’s arcade sounds and converting them to 8 bit samples stored in header file arrays, the game came to life. Instead of being a quirky, abstract 1D game it was a battle royale complete with missiles whooshing and exploding and players powering up and teleporting around.
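The conversion to 8 bit samples in a header file array could be done with a small host-side tool along these lines. This is a sketch under assumptions: it supposes the source audio is signed 16 bit PCM, and the function names and array naming are made up for the example. The PWM playback on the Pico itself is not shown.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <sstream>
#include <string>
#include <vector>

// Map a signed 16 bit sample (-32768..32767) to an unsigned 8 bit level
// (0..255), which is a convenient range for a PWM duty cycle.
std::uint8_t toEightBit(std::int16_t sample) {
    return static_cast<std::uint8_t>((static_cast<int>(sample) + 32768) >> 8);
}

// Emit the samples as a C array definition suitable for a header file.
std::string toHeaderArray(const std::string& name,
                          const std::vector<std::int16_t>& samples) {
    assert(!name.empty() && !samples.empty());
    std::ostringstream out;
    out << "const unsigned char " << name << "[" << samples.size() << "] = {";
    for (std::size_t i = 0; i < samples.size(); ++i) {
        if (i > 0) out << ", ";
        out << static_cast<int>(toEightBit(samples[i]));
    }
    out << "};\n";
    return out.str();
}
```

Dropping the low 8 bits of every sample is part of what makes the result sound grungy: quiet detail disappears into the quantisation noise.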

I haven’t made a game in a long time and I’ve never made a hardware game, so this project has been a lot of fun. It’s also something that I couldn’t have done without Jason, Jo and everyone at the Brighton Indie Gamedev socials: thank you to everyone for your help and feedback.

“Battle Lines: Arcade Machine” (BL:AM) was included in the Pop Up Arcade at the Dreamy Place Festival in Crawley in October 2023 where I had lots of fun playing it and talking about the design and development. You can find more gameplay footage here.

How (Not) to Build a Metaverse

Earlier in the year I helped Josh Sanburn and his team put together a podcast series on building Second Life for the Wall Street Journal called “How To Build a Metaverse” which I’m now really enjoying. It’s great to hear all of the amazing stories about the origin …

read more

Virtual Worlds, Real People

Last week I gave a lab talk to my former research colleagues at the Mixed Reality Lab at the University of Nottingham about the work I’ve been doing since leaving the lab over 20 years ago. Rather than talk about technology I focussed on the lessons that today’s efforts …

read more

Virtual Objects You Can Touch

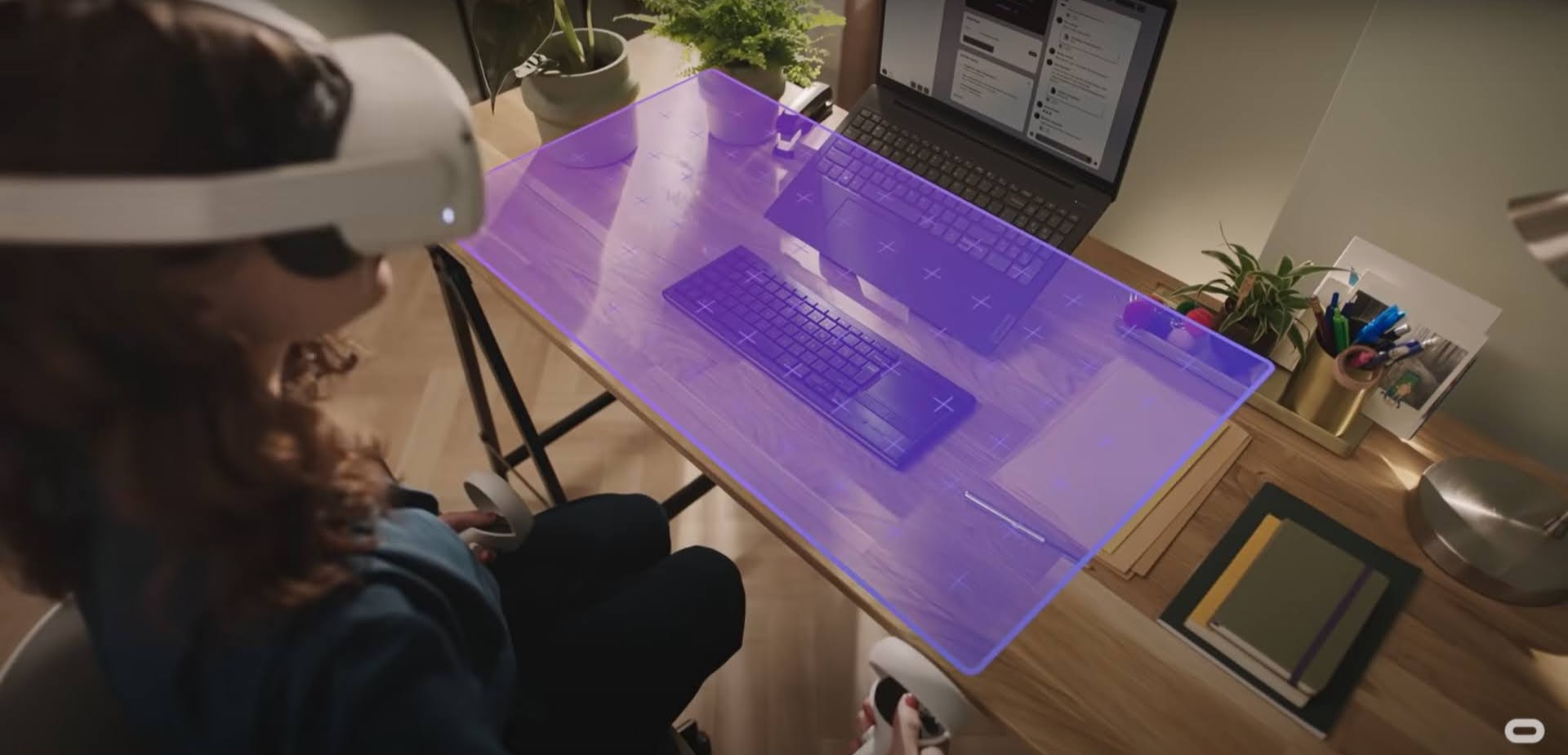

Now that Horizon Workrooms has launched I’m very happy to be able to write about the functionality that I found most exciting while building the experience: the mapping of virtual objects to their real world counterparts.

Typically augmented and mixed reality experiences overlay real world objects with virtual annotations …

read more

The Art Of Social VR

The recording of my recent Stereopsia 2020 talk about the art of designing social VR experiences is now online. The talk summarises a lot of material covered in more depth in my posts on The Conversation Around Content, A Tall Dark Stranger and Small Places Loosely Joined, so if please …

read more

A Past And Present Future Of Work

Over the last few years I’ve spent a lot of time helping people new to virtual worlds learn how they work. Over the last few weeks I’ve been sharing a series of short posts on some of the high level concepts I covered which will hopefully be useful …

read more

Small Places Loosely Joined

Over the last few years I’ve spent a lot of time helping people new to virtual worlds learn how they work. Over the next few weeks I’m sharing a series of short posts on some of the high level concepts I covered which will hopefully be useful to …

read more

A Tall Dark Stranger

Over the past few years I’ve spent a lot of time helping people new to virtual worlds understand how they work. Over the next few weeks I’m going to share a series of short posts on some of the high level concepts I covered which will hopefully be …

read more

The Conversation Around Content

Over the last few years I’ve spent a lot of time helping people new to virtual worlds learn how they work. Over the next few weeks I’m going to share a series of short posts on some of the high level concepts I covered which will hopefully be …

read more

HTTPS

Before my recent post about leaving Facebook, it had been a while since I’d updated The Creation Engine and it turned out I had some housekeeping to do. After pushing the Pelican output to https://github.com/jimpurbrick/jimpurbrick.github.com I got a mail from GitHub saying that …

read more

0 to 1

8 years ago London was hosting the Olympics and I met Philip Su for the first time at Browns in Covent Garden to talk about the engineering office Facebook was planning to open in London. By the end of this year Facebook London will have thousands of people working in …

read more

This blog is 10

Just over ten years ago I set up The Creation Engine No. 2 after previously blogging on the original Linden Lab hosted Creation Engine and before that on Terra Nova. So, while I’ve been blogging for almost 14 years, 10 years of The Creation Engine No. 2 seems like …

read more

Replicated Redux: The Movie

The recording of my recent React Europe talk about Replicated Redux is now online and I’ve written several other posts describing designing, testing and generalising the library if you would like to know more about the details. If you’d like to play the web version of pairs or …

read more

Replaying Replicated Redux

While property based tests proved to be a powerful tool for finding and fixing problems with ReactVR pairs, the limitations of the simplistic clientPredictionConstistenty mechanism remained.

It’s easy to think of applications where one order of a sequence of actions is valid, but another order is invalid. Imagine an …

read more

Building Safety in to Social VR

Last year I hosted a panel on creating a safe environment for people in VR with Tony Sheng and Darshan Shankar at OC3. I commented at the time that the discussion reminded me of the story of LambdaMOO becoming a self-governing community told by Julian Dibbell in My Tiny Life …

read more

Testing Replicated Redux

Opening a couple of browser windows and clicking around was more than sufficient for testing the initial version of ReactVR pairs. Implementing a simple middleware to log actions took advantage of the Redux approach of reifying events to allow a glance at the console to reveal precisely which sequence of …

read more

ReactVR Redux Revisited

There were a couple of aspects of my previous experiments building networked ReactVR experiences with Redux that were unsatisfactory: there wasn’t a clean separation between the application logic and network code and, while the example exploited idempotency to reduce latency for some actions, actions which could generate conflicts used …

read more

Generation JPod

I’ve just got back from Kaş where I spent a lovely few days celebrating Pinar and Simon’s wedding and while there spent a few hours reading Now We Are 40: a thoughtful and entertaining look at everything from house music to house prices from the perspective of Generation …

read more

2² Decades

Several years ago when we were in 100 robots together, Max was celebrating his 40th birthday. When I said that mine would be in 2017, it felt like an impossibly far future date, but, after what feels like the blink of an eye, here we are.

Along with many other …

read more

VR Redux

Mike and I have been talking about how to easily build simple networked social applications with ReactVR for a while, so I spent some time hacking over the Christmas break to see if I could build a ReactVR version of the pairs game in Oculus Rooms. Pairs is simple and …

read more

The Creation Engine No. 2
